This article by David Walker, a serving Army Officer who is posted to Land Capability Division, describes how recent information management and organisational reforms within Defence’s capability development sector have inadvertently increased the likelihood of project failure and workforce fatigue. To assist the reader, David provides the concepts through which his argument will be presented, as well as the 'journey' of information management in Defence projects in recent times.

After framing his argument, David offers a solution based on automation enabled by standardisation of methodology, data structures, and tooling.

While this article is aimed at those posted to work on Defence projects, it is an interesting and thought-provoking read. David's thoughts and suggestions may be relevant to information management in other areas as well as within the capability management arena.

Click on the link below to access the article: A knowledge management crisis for capability development.

This article describes how recent information management and organisational reforms have inadvertently increased the likelihood of project failure and workforce fatigue. It then offers a solution based on automation enabled by standardisation of methodology, data structures, and tooling.

Executive Summary / Abstract / Bottom Line Up Front

Modern capability projects have literally millions of relationships that must be tracked; relationships between scenarios, activities, objectives, tasks, risks, systems, sub-systems, programs, people, organisations, concepts, technologies, research findings, tests, and much else. Furthermore, our projects must facilitate continuous knowledge transfer between dozens (even hundreds) of stakeholders and subject matter experts (SMEs). To develop world class capability, we need world class information management. We have a long way to go to achieve this. The 2015 First Principles Review (FPR) found that across Defence ‘processes are complicated, slow and inefficient… Waste, inefficiency and rework are palpable.’ Information management was identified as a key area of concern and one that will require ‘gradually building interoperability, compatibility and simplicity over time.’[i]

Since the FPR, information management reforms have tended to focus on the information needs of strategic level decision makers (2-star and above). However, I propose that most of our problems emerge from poor information management at the project level. Most information for most projects is held in disconnected documents that would look familiar to capability project managers of the pre-digital age. Often, digitisation has involved little more than changing the storage medium, and even this modest progress is frequently negated by careless archiving and version control. Our information management is so bad, and our projects so complex, that we are often overwhelmed by our own content. We consequently spend most of our time explaining and interpreting, and only a small fraction thinking and modelling. This imbalance is self-reinforcing, so we are accelerating on a downward spiral.

On the plus side, there is an abundance of low hanging fruit. Shifting our information out of documents and into a standardised database could easily double the productivity of the workforce by enabling automation, reducing noise, and providing a common language to streamline our discourse.

Conceptual Framework

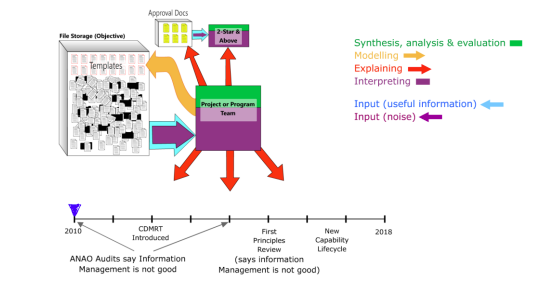

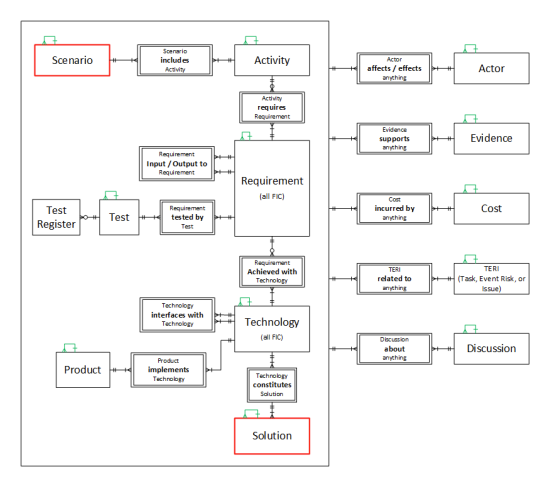

Before making this case in detail I will briefly explain the concepts through which my arguments will be presented. Figure 1 is a high-level representation of a project or program team’s information flows and cognitive activities.

Figure 1 / Information flows circa 2010

Thinking (Synthesis, analysis, and evaluation). Synthesis is putting things together to create feasible courses of action. Analysis is breaking things down, estimating causal relationships, and predicting outcomes. Evaluation is making value judgements about risk and potential outcomes. For convenience I will refer to these cognitive processes collectively as Thinking. Note this concept of Thinking excludes the thinking required for Modelling, Explaining, and Interpreting. For clarity throughout this article I capitalise the words Thinking, Modelling, Explaining, and Interpreting to indicate that I am using them in a particular context.

Modelling. Modelling is taken to be the creation of the project model, which is the single source of truth for the project. The fact that most projects tend not to have a single source of truth is a problem I will return to later. The project model is a simplified representation of all relevant things, actions, events, concepts, or combinations thereof. It is the capability designs, plans, and chain of evidence.

Explaining. This is converting the existing project model into a form (usually verbal or written) for a particular audience. The first time a project team codifies something it is Modelling. Every mention (written or verbal) thereafter is Explaining. I will shortly argue that if you get the Modelling right, you can automate most Explaining.

Interpreting (aka sense-making). This is making sense of inputs. Inputs may be instructions, orders, policies, requests, technical specifications, user requirements, judicial findings, literature, meeting minutes, phone conversations, and much else.

Information Quality. While it has no graphical representation here, Information Quality is an important concept. It is considered a product of accuracy, timeliness, completeness, and usability. Most importantly (and by the definitions provided), it is directly proportional to Thinking and Modelling.

Inputs. Depending on their quality, inputs contain various amounts of useful information (blue) and noise (purple). Noise is all the data that is not required, which consequently increases the interpretation effort.

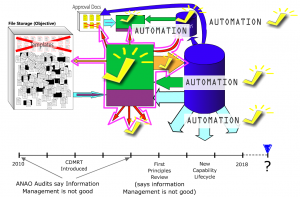

Templates. Templates are standardised information structures and partial solutions to known problems. They are used extensively across all industries for almost all forms of communication and record keeping. Figure 1 shows that Models and approvals (circa 2010) were guided by file-based templates.

Approval documents. These are key artefacts of Explaining. They are given special consideration here for reasons that will become obvious.

File Storage. The black boxes containing file icons represent file storage. The Defence file storage system is called Objective. The disorderly pile of black files and folders in Figure 1 is intended to indicate that Objective contains many files containing information that is unstructured, disconnected, and (often) not well maintained. The orderly line of templates and approval documents is intended to show that it is possible to achieve some degree of structure within Objective using templates.

Database. Shown in Figure 2 (but not Figure 1), is a blue cylinder representing a database management system (DBMS), henceforth referred to simply as a database. A database stores structured information and can perform certain types of analysis, which is represented with a green arrow because it augments, facilitates, and replaces human Thinking.

The Journey to Knowledge Management Crisis

To understand our predicament, it is helpful to consider how we got here. Figure 1 represents a previous regime under which Modelling and approvals were based on a large suite of Word and Excel templates. Six or seven years ago General Caligari believed he was spending too much time Interpreting noisy inputs and not enough time Thinking about options (too much purple, not enough green). To remedy this, he introduced a database to provide precisely the information he needed in a format that he could quickly consume. That was the birth of the Capability Development Management and Reporting Tool (CDMRT).[ii]

CDMRT improved information management considerably at the 2-star level. As far as database applications go, CDMRT is a long way from state-of-the-art, but it is a great improvement on no database. With the introduction of CDMRT four things happened:

- Projects shifted some of their Explaining effort from reports and returns into database maintenance.

- The database provided cleaner input to its intended users.

- Because they were getting clean inputs, those users needed to perform less Interpreting and had more time for Thinking.

- In addition, the database performed some of the analysis that was previously left to humans or (more likely) not done at all.

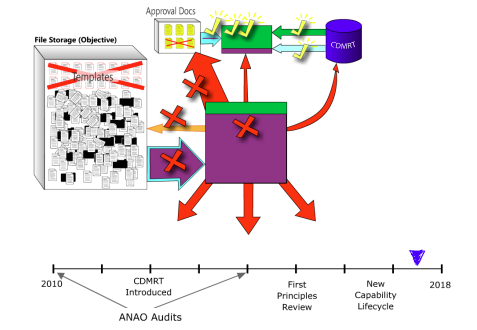

So CDMRT is a big improvement for 2-star and above. But approval documents were still too noisy, quality of analysis and synthesis at the project and program level was still not satisfactory, and (because of these things) Government had lost trust in Defence. This situation led to the First Principles Review (FPR) and the New Capability Lifecycle (CLC). The New CLC has surprisingly little to say about how projects should be run. It is focused almost entirely at the strategic level with its primary concern being the re-establishment of trust between Government and Defence.[iii] By far the most important change for projects was the removal of templates and the requirement to tailor the document suite.

The removal of templates was intended (like CDMRT) to improve the quality of information at and above 2-star level. While Word and Excel templates are probably not a very good way to provide structure for capability planning and design, removing them without providing something in their place appears to have had some unexpected consequences. These are represented in Figure 2.

Figure 2 / Impact of removing structure

The tailored approval documents make inputs to senior decision makers a little cleaner, which in turn reduces Interpretation effort and increases time for Thinking. This is good, but the cost dramatically outweighs that benefit.

- The effort to create approval documents grows, because it is harder to create bespoke documents than it is to populate templates.

- The Modelling effort shrinks because: a. Explaining effort has increased, and b. the templates, which previously provided Modelling guidance, have been removed or at least had their authority greatly diminished.

- Because Modelling has shrunk, and templates have largely gone, there is now even less structure in the already poorly structured information store, causing inputs to be noisier.

- Interpretation effort increases.

- Thinking decreases; therefore, quality decreases, and we are accelerating on a downward spiral.

The 2-star level is enjoying the benefits of a streamlined process, but that may be a veneer of order on a crumbling system. Because capability development projects are extremely complex, it is often impossible to tell the difference between high-quality information and low-quality information, especially when looking at a substantially condensed version. Quality is largely determined by the strength and validity of the chain of evidence, and only a tiny fraction of that is presented to senior decision makers. So, although they have a good amount of time for Thinking, they may be Thinking about the wrong options, risks, and issues.

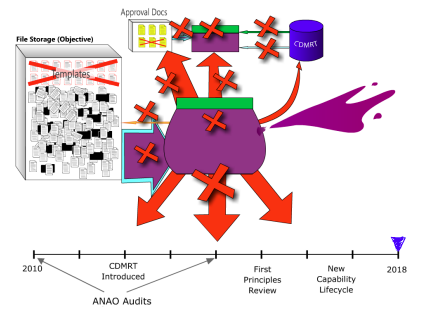

To make matters worse, three big ideas of the new CLC add complexity at the project and program level. These are: 1) the ‘programmatic approach’ seeking to enhance integration and interoperability between projects; 2) a drive to design capabilities with consideration for all Fundamental Inputs to Capability (FIC); and 3) Industry as a FIC.[iv]

These all increase the Explaining and Interpreting requirement for project teams that are already stretched beyond their capacity. So, the programs and projects have lost structure and gained complexity.

Figure 3 / The looming crisis

The result (represented in Figure 3) is that we become overwhelmed by our own content. There is simply too much unstructured data to make sense of. The most damaging symptoms of this situation include:

- repetition or loss of the work of predecessors or other projects,

- a weak or absent chain of evidence,

- incompatible system interfaces,

- architectures that obstruct innovation rather than facilitate integration,

- incoherent or inconsistent messaging to political decision makers,

- increasing demand for reports and returns,

- arbitrary prioritisation of work,

- burnt out staff, and of course,

- lots of contractors.

The Solution

While there may be many potential areas of improvement, the most pressing need is to provide capability project teams and programs with structure and to get information out of binary documents and into a database.

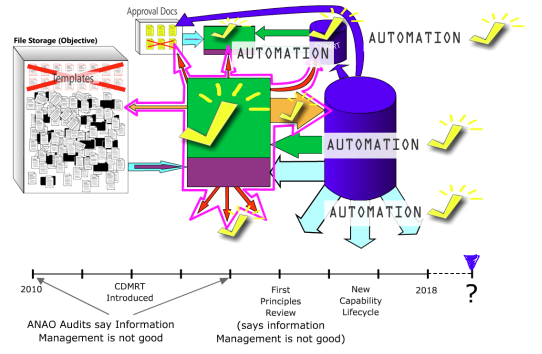

Figure 4 / Solution – database enabled automation


Modern databases are both powerful and user friendly. This is an enormous capability that Defence pays for but doesn’t use.[v] A well-designed database could at least double productivity. Most reports and returns could be automated if information were correctly stored in a database. A database provides clean data to users based on their context and their immediate needs without any intervention by project staff. This massively reduces Explaining effort as illustrated in Figure 4.
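As a rough illustration of what automating a return could look like, the sketch below builds a small in-memory SQLite database and generates a risk return for whichever ratings the reader asks for. The `risks` table, its columns, the sample rows, and the `risk_return` function are all invented for this example; they do not describe CDMRT or any real Defence system.

```python
import sqlite3


def build_example() -> sqlite3.Connection:
    """Create an in-memory database holding a few invented risk records."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
    CREATE TABLE risks (
        id INTEGER PRIMARY KEY,
        project TEXT NOT NULL,
        description TEXT NOT NULL,
        rating TEXT NOT NULL,
        owner TEXT NOT NULL
    );
    INSERT INTO risks VALUES
        (1, 'Project A', 'Supplier delay on radios', 'High', 'Desk officer 1'),
        (2, 'Project A', 'Training area availability', 'Low', 'Desk officer 2'),
        (3, 'Project B', 'Interface standard not agreed', 'High', 'Desk officer 3');
    """)
    return conn


def risk_return(conn: sqlite3.Connection, ratings: list[str]) -> str:
    """Render a plain-text return listing every risk with one of the given ratings."""
    # One "?" placeholder per rating keeps the query parameterised.
    placeholders = ",".join("?" for _ in ratings)
    rows = conn.execute(
        f"SELECT project, description, owner FROM risks "
        f"WHERE rating IN ({placeholders}) ORDER BY project, id",
        tuple(ratings),
    ).fetchall()
    lines = [f"Risk return ({', '.join(ratings)}): {len(rows)} item(s)"]
    lines += [
        f"- {project}: {description} (owner: {owner})"
        for project, description, owner in rows
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(risk_return(build_example(), ["High"]))
```

The point of the sketch is that the project team maintains the records once; each reader then pulls the view they need without anyone writing a new document.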

In addition, a database performs analysis and testing that would otherwise not be done. It is often the kind that humans are not very good at. For example, the database might:

- flag potentially incompatible interfaces within the project or with other projects,

- find you all the requirements that are not yet satisfied by at least one sub-requirement or component,

- warn you when there is a high estimated cost to achieve system features that are only needed for low priority tasks, or

- run through a stochastic simulation a billion times to help you optimise system components or schedules.
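Two of the checks listed above can be sketched as ordinary database queries. The example below is a minimal illustration using an in-memory SQLite database; the table names, columns, priority scale (1 is highest), cost figures, and sample rows are all assumptions made for the example, and requirements stand in for the "tasks" mentioned in the text.

```python
import sqlite3


def build_example() -> sqlite3.Connection:
    """Create an in-memory database with a few invented requirements and components."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
    CREATE TABLE requirements (
        id INTEGER PRIMARY KEY,
        statement TEXT NOT NULL,
        priority INTEGER NOT NULL  -- assumed scale: 1 = highest priority
    );
    CREATE TABLE components (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        est_cost REAL NOT NULL     -- arbitrary cost units
    );
    CREATE TABLE satisfies (
        requirement_id INTEGER NOT NULL REFERENCES requirements(id),
        component_id INTEGER NOT NULL REFERENCES components(id)
    );
    INSERT INTO requirements VALUES
        (1, 'Communicate beyond line of sight', 1),
        (2, 'Stream video to headquarters', 3),
        (3, 'Operate for 72 hours without resupply', 1);
    INSERT INTO components VALUES
        (1, 'Satellite terminal', 900.0),
        (2, 'HF radio', 50.0);
    INSERT INTO satisfies VALUES (1, 2), (2, 1);
    """)
    return conn


def unsatisfied_requirements(conn: sqlite3.Connection) -> list[str]:
    """Return requirements that no component has been linked to yet."""
    rows = conn.execute("""
        SELECT r.statement
        FROM requirements r
        LEFT JOIN satisfies s ON s.requirement_id = r.id
        WHERE s.component_id IS NULL
        ORDER BY r.id
    """).fetchall()
    return [statement for (statement,) in rows]


def costly_low_priority(conn: sqlite3.Connection, cost_threshold: float,
                        low_priority_from: int) -> list[str]:
    """Return expensive components whose linked requirements are all low priority."""
    rows = conn.execute("""
        SELECT c.name
        FROM components c
        JOIN satisfies s ON s.component_id = c.id
        JOIN requirements r ON r.id = s.requirement_id
        WHERE c.est_cost >= ?
        GROUP BY c.id, c.name
        HAVING MIN(r.priority) >= ?  -- even the most important linked requirement is low priority
        ORDER BY c.name
    """, (cost_threshold, low_priority_from)).fetchall()
    return [name for (name,) in rows]


if __name__ == "__main__":
    conn = build_example()
    print(unsatisfied_requirements(conn))
    print(costly_low_priority(conn, cost_threshold=500, low_priority_from=3))
```

With the sample data, the first query reports the 72-hour endurance requirement as unsatisfied, and the second flags the satellite terminal as a costly item that serves only a priority 3 requirement. Neither check is practical when the same information is spread across documents.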

With the machines doing what they do best, humans can concentrate on doing what they do best, which is reframing wicked problems, conceiving options, and iteratively moving toward viable solutions.[vi] Critical to this effort is teaching the workforce how to effectively model a project – the big orange arrow in Figure 4.

It is important to avoid getting hung up on tools and instead focus on data structures and methodology. If we get these right (or at least on the right track), there are many tools that can do the job and they all work more-or-less the same way. Good data structures and methodologies will be tool agnostic, allowing us to move between tools as needed. The important thing is that everyone understands the data structures, which are the same for all staff regardless of expertise.

Data Structures

The design of data structures is generally referred to as a schema or a meta-model. Figure 5 is an example of a Database Schema that might be close to what we need. It is presented for illustrative purposes only. The critical feature of any database is the ability to keep track of relationships between entities. Even a novice who has never seen a database design will immediately recognise what this schema is trying to achieve. Scenarios have Activities, which have Requirements, which are achieved with Technologies, which are combined into Solutions. Requirements are validated by Tests, which once executed are stored in a Tests Register, and so on. A project model is built up over time by populating the tables holding data for each of these entities. The model represents the capability design and the chain of evidence that supports every proposition related to that design. If information is not part of the design or part of the evidence base for related propositions, then it is probably noise and belongs in the trash.

Figure 5 / Draft capability development schema
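To make the idea of tracking relationships concrete, the following sketch expresses the entities named above as relational tables and then traces a Solution back to the Scenario that justifies it. It is not the schema in Figure 5: the table names, column names, link tables, and sample rows are assumptions chosen for illustration, and SQLite is used only because it ships with Python.

```python
import sqlite3

# Hypothetical tables loosely following the entities named in the text.
SCHEMA = """
CREATE TABLE scenarios    (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE activities   (id INTEGER PRIMARY KEY, name TEXT NOT NULL,
                           scenario_id INTEGER NOT NULL REFERENCES scenarios(id));
CREATE TABLE requirements (id INTEGER PRIMARY KEY, statement TEXT NOT NULL,
                           activity_id INTEGER NOT NULL REFERENCES activities(id));
CREATE TABLE technologies (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE solutions    (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
-- Link tables record many-to-many relationships.
CREATE TABLE requirement_technology (
    requirement_id INTEGER NOT NULL REFERENCES requirements(id),
    technology_id  INTEGER NOT NULL REFERENCES technologies(id),
    PRIMARY KEY (requirement_id, technology_id));
CREATE TABLE solution_technology (
    solution_id   INTEGER NOT NULL REFERENCES solutions(id),
    technology_id INTEGER NOT NULL REFERENCES technologies(id),
    PRIMARY KEY (solution_id, technology_id));
CREATE TABLE tests (id INTEGER PRIMARY KEY, name TEXT NOT NULL,
                    requirement_id INTEGER NOT NULL REFERENCES requirements(id));
CREATE TABLE tests_register (id INTEGER PRIMARY KEY,
                             test_id INTEGER NOT NULL REFERENCES tests(id),
                             executed_on TEXT NOT NULL, passed INTEGER NOT NULL);
"""


def build_model() -> sqlite3.Connection:
    """Create an empty in-memory project model with foreign keys enforced."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def load_example(conn: sqlite3.Connection) -> None:
    """Insert a tiny invented data set so the query has something to return."""
    conn.executescript("""
    INSERT INTO scenarios VALUES (1, 'Flood relief');
    INSERT INTO activities VALUES (1, 'Move supplies by road', 1);
    INSERT INTO requirements VALUES (1, 'Carry 4 t over unsealed roads', 1);
    INSERT INTO technologies VALUES (1, 'Medium truck');
    INSERT INTO solutions VALUES (1, 'Logistics vehicle fleet');
    INSERT INTO requirement_technology VALUES (1, 1);
    INSERT INTO solution_technology VALUES (1, 1);
    INSERT INTO tests VALUES (1, 'Payload trial', 1);
    INSERT INTO tests_register VALUES (1, 1, '2019-01-01', 1);
    """)


def chain_of_evidence(conn: sqlite3.Connection, solution_name: str) -> list[tuple]:
    """Trace a solution back through technologies, requirements, and activities to scenarios."""
    return conn.execute("""
        SELECT sc.name, a.name, r.statement, t.name
        FROM solutions s
        JOIN solution_technology st ON st.solution_id = s.id
        JOIN technologies t ON t.id = st.technology_id
        JOIN requirement_technology rt ON rt.technology_id = t.id
        JOIN requirements r ON r.id = rt.requirement_id
        JOIN activities a ON a.id = r.activity_id
        JOIN scenarios sc ON sc.id = a.scenario_id
        WHERE s.name = ?
        ORDER BY sc.name, a.name, r.statement, t.name
    """, (solution_name,)).fetchall()


if __name__ == "__main__":
    conn = build_model()
    load_example(conn)
    for row in chain_of_evidence(conn, "Logistics vehicle fleet"):
        print(" -> ".join(row))
```

Each row returned is one complete strand of the chain of evidence. A solution that returns no rows has no recorded justification, which is exactly the kind of gap that is hard to see in a folder of documents.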

Structuring project information in this way (or similar) will be beneficial for any individual project, but the greatest benefits will be realised when data structures are standardised across all projects. A standard schema will become the foundation of a common language without which our projects cannot be integrated and deconflicted.

Implementation

For a start, it is important to recognise that a lot of good work has already been done. Several projects are implementing some aspects of a schema-based approach utilising contracted systems engineering support.[vii] At least four architecture design efforts led variously by Land Network Integration Centre, VCDF Group, Soldier Combat Systems Program, and the Combat Service Support Program are making use of a database model. Perhaps the most promising line of effort is the Integration and Interoperability Framework (I2F) under development by Capability Integration, Test & Evaluation Branch (CIT&E).[viii] A full discussion of these efforts is beyond the scope of this paper; suffice to say they will be largely wasted if there is not a concurrent effort by the various capability divisions to develop and implement a standardised schema-based approach.

For the design of data structures, existing standards will take us much of the way to a viable solution.[ix] Greater effort may be required to develop methodologies appropriate to our workforce and the capability lifecycle. We will need a basic course that covers everything most desk officers need to know in less than a week. That training should be delivered online and supported by specialists. Specialists should be allocated one per program (or thereabouts) in perpetuity and funded by projects until Defence can grow the capability.

We should adopt (not develop) a simple tool that will be used by most people and taught on the basic course. Specialists may choose to use a variety of other tools, several of which Defence already has available. The database must be common to all users, but the method of interaction will vary depending on the operator’s expertise.

Overcoming Institutional and Cultural Barriers

An Unfortunate Name

About a decade ago some well-meaning engineers branded this approach (or something like it) Model Based Systems Engineering (MBSE). Unfortunately, that name has caught on. Not only is it completely meaningless but it has hindered adoption for two reasons. First, MBSE sounds scary, and sounds like a specialisation for advanced systems engineers. The intent of the methodology is quite the opposite. It attempts to bring non-engineer stakeholders and SMEs into the capability design effort and establish a model that spans the system’s entire lifecycle. Second, the name implies there is a valid alternative. There really is not, except perhaps for the design of very simple and very independent systems the likes of which none of us is concerned with. MBSE is simply joining things up to create a coherent model and a chain of evidence.[x]

Internal Objections

There are three likely objections I feel compelled to briefly address. Firstly, it will be argued (primarily by CIOG) that Enterprise Resource Planning (ERP) may take care of this so we should wait and see what it delivers. Secondly, it will be argued that our workforce is not up to it. And thirdly, it will be argued that we continue to deliver projects without this approach, so it cannot be all that important. A full rebuttal of these objections is beyond the scope of this article, but I will give them each a short burst.

ERP is unlikely to provide anything in direct support of capability development. At best it may (in about four years or so) provide a useful tool that is connected to other areas of Defence. However, as previously alluded to, tool selection is a relatively trivial and easily reversible decision. Waiting for ERP will simply delay this important reform for at least four years with no benefit.

Concerns about workforce competence are valid. The mitigation is to have a scalable methodology. The most basic processes should be intuitive to anyone who can open and edit an Excel spreadsheet. This reform could also be used as a context within which to crack down on the generally appalling information management practices across the organisation, the elimination of which would itself reap substantial gains.

The third objection will be the most difficult to overcome. Despite substantial evidence to the contrary, people will continue to believe they can model their projects perfectly well in Microsoft Word and Excel. There may be several human biases at play here. First, we (humans) substantially underestimate exponential growth.[xi] [xii] We therefore dramatically underestimate the number of options and risks that we are not considering. Albert Bartlett considered this the ‘greatest shortcoming of the human race.’[xiii] Exacerbating our exponential growth bias are confirmation bias and overconfidence bias. The former causes people to block information that does not resonate with their preferred narrative. The latter means 'the confidence that individuals have in their beliefs depends mostly on the quality of the story they can tell about what they see, even if they see little.’[xiv]
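The scale of that underestimate can be made concrete with some simple counting. The sketch below is an illustration only: the figures of 2,000 entities and 20 design decisions are arbitrary assumptions, not numbers drawn from any project.

```python
from math import comb


def pairwise_relationships(n_entities: int) -> int:
    """Number of distinct pairs that could be related among n entities."""
    return comb(n_entities, 2)


def design_options(n_decisions: int, alternatives_per_decision: int = 2) -> int:
    """Number of distinct option sets when each decision is made independently."""
    return alternatives_per_decision ** n_decisions


if __name__ == "__main__":
    print(pairwise_relationships(2000))  # 1999000 potential pairwise relationships
    print(design_options(20))            # 1048576 combinations of 20 yes/no decisions
```

A project with a few thousand entities therefore has around two million potential pairwise relationships, and twenty independent yes/no design choices already yield more than a million option sets. Neither number is manageable in Word and Excel.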

Thank you for reading. I would be very grateful for any feedback or alternative views.

Thanks for taking the time to write an insightful and open article with some valuable self-reflection for Defence.

After nearly 10 years of applying “MBSE” to numerous projects I’ve seen many of the benefits you raise realised in projects. To me, and as you articulate, the benefits come from structuring the currently unstructured information. As our projects become increasingly complex and integrated, the volume of unstructured information will only increase. A model, with its underlying structure (captured in a schema), is a way to address this. This also enforces a greater level of engineering rigor in the development of the information repository. For example, when viewing the model it is immediately obvious where gaps exist in the information. This is not easily achievable, or even possible, in the unstructured information found in documents and spreadsheets. I believe we need to make the shift to “MBSE” sooner rather than later.

I read your statement that “…well-designed database could at least double productivity” and initially thought this might be overly optimistic. I then considered the downstream savings and not just the cost of producing the information. For example, your conclusion that a model would reduce the interpretation effort should be included in the calculation. Whilst I still think the assertion is somewhat optimistic, it's more true than I first thought.

Yes, it's important to focus on data structures and associated methodology first. Next comes the ability to analyse the model, then consideration of graphical languages, and finally the choice of the tool that best matches these needs. Good Systems Engineering; solution follows needs.

You are correct about the “MBSE” naming. I guess for the engineers at the time it was very descriptive and differentiated their approach from the traditional (document-centric) approach. To me, and as you indicate, it should be just called (best practice) Systems Engineering.

What else can “MBSE” provide? You might want to check my INCOSE IS Keynote:

https://www.youtube.com/watch?v=TfAuIo_GWTo

You may also be interested in the early work in this field:

https://www.shoalgroup.com/wp-content/uploads/2017/06/Robinson-et-al-2010-Demonstrating-Model-Based-Systems-Engineering-for-Specifying-Complex-Capability-SETE-2010.pdf

Thanks again.